The driver of any vehicle makes a huge number of decisions every minute while also watching the situation closely. Surrounding cars, road markings and signs, correct use of the controls: handling all of this at once is very difficult. It takes a long time for a motorist to gain experience and control the situation confidently, and until then there is always a risk of an accident.

A traffic sign recognition (TSR) system is designed to help drivers in everyday driving and take some of the load off the driver, especially one who is tired behind the wheel.

Traffic Sign Recognition System

SUBJECT AND METHOD

Public roads provide plenty of typical test situations. Highways near Moscow are rich in speed limits of every kind, narrower roads have “lollipops” prohibiting overtaking, and highways and expressways within the city have non-standard signs that the video eye must read at fairly high speed. We drove the route twice: in daylight and in the dark. During the day the windshield was periodically wetted by rain, and heavy trucks generously splashed it with mud. In short, we did not shy away from real road conditions.

Unlike most electronic assistants, the one responsible for recognizing signs is relatively simple. The camera picks out signs and checks them against its database by shape and by the set and arrangement of symbols. The scanners do not respond to similar-looking limits on maximum vehicle weight or height (also large numbers inside a red border). True, funny things do happen. While overtaking a truck, the Opel suddenly showed “30” on the display. It turned out that the scanner had read a miniature speed limit sign on the tank of a sprinkler truck.

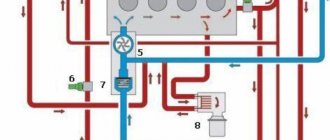

How TSR works

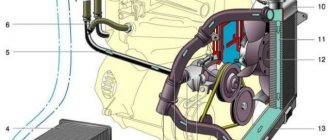

Today, leading car manufacturers (such as Ford, BMW, Audi, Volkswagen, Opel and Mercedes-Benz) install such systems on their vehicles. Their main purpose is to remind the driver to observe the speed limit. When the car passes a sign, the system compares the current speed with the posted limit. If the driver is exceeding it, the equipment reminds the driver to slow down. Interestingly, different manufacturers give these systems different names, although they perform similar functions. Mercedes-Benz calls it Speed Limit Assist, and Opel calls it Opel Eye (recognized as the best innovation in auto safety for 2010). Volvo named its system Road Sign Information.

ROAD CHECK

The BMW disappoints in the first minutes: it rarely notices “80” signs on the right side of wide Moscow highways. The Opel is a little more attentive. Still, it is clear that these conditions are abnormal for the systems: on such a wide road the signs need to be duplicated on overhead span wires, an overhead gantry or the median barrier.

Outside the city, the assistants were more confident: there was less distracting information, and the signs were much closer. However, there was a fly in the ointment. The Opel is sensitive to the orientation of the sign: if it is slightly tilted or turned, the camera misses it. The BMW has its own quirks. The Bavarian's standard navigation includes speed limits for all roads, and if the scanner does not see road signs, the computer relies on map data. It would be better if it didn't! The electronic guide does not always track the boundaries of settlements correctly when switching between the “60” and “90” limits. Sometimes it also shows completely inexplicable limits of 50 or 70 km/h, with the change occurring in a forest or an open field. The Opel Eye system is not tied to navigation; it only tracks real roadside information, and therefore misinforms the driver less often.

System-specific limitations

Traffic sign recognition systems are gradually becoming an integral part of many cars. They are now being fitted even to mid-price cars and some budget models. They are spreading this widely because they can actually reduce the number of road accidents. At the same time, this equipment increases driving comfort: thanks to it, the driver does not have to constantly watch for road signs.

However, the system still has a number of shortcomings that have not yet been eliminated. It cannot recognize road signs installed in violation of regulations. This drawback is especially relevant for cars with semi-autonomous or autonomous control. According to the developers, traffic sign recognition will be supplemented with artificial intelligence, which should help overcome this shortcoming.

VISUAL DEFECTS

A short but heavy rain spoiled the Opel's accuracy rating. Drops on the glass that the wipers do not have time to clear cut the assistant's vigilance by half. High beams at night had a similar effect on its visual acuity: the electronics consistently ignored signs caught in the headlights. The BMW does not allow itself such whims.

But don't expect the system to warn you about a sign in advance. Despite a declared 100-meter range, the cameras of both the Opel and the BMW send information to the display no earlier than the moment the sign reaches the front bumper. The “Bavarian” even takes a theatrical pause and shows the message only when the signpost looms in the rearview mirror. This does not depend on weather conditions or time of day.


Kirill Mileshkin

“It is impossible to miss the colored no-overtaking and end-of-restriction symbols that appear on the head-up display, whereas the real signs on the roadside are easy to overlook. Darkness, drops or dirt on the windshield are no problem for the camera. I wish I had such sharp eyes! As a result, the BMW is superior to the Opel in two key parameters: scanning quality and presentation of information. As for the incorrect navigation prompts about the speed limit, they never overstated it relative to the real limit.”


Stage 1. Color image segmentation

Image capture. Search for red and white colors

A distinguishing feature of prohibition signs is a predominantly white circle with a red outline, which allows them to be identified in images. After we get the RGB frame from the camera, we crop it to 512 x 512 (Fig. 1) and highlight the red and white colors, discarding all the others.

For color localization, that is, identifying elements of a specific color, the RGB format is very inconvenient: pure red is very rare in nature and almost always comes mixed with other colors. In addition, a color changes hue and brightness depending on the lighting. For example, at sunrise and sunset all objects acquire a red tint, and dusk and semi-darkness add their own shades.

Fig. 1. An image in RGB format, 512 x 512 in size, received as the input of the algorithm.

However, we first tried to solve the problem using the original RGB format. To highlight red, we set lower and upper thresholds: R > 0.7, and G and B < 0.2. But this model turned out to be inconvenient, because the values of the color channels depended heavily on illumination and time of day. For example, the RGB values of the same red differ greatly between sunny and cloudy days.
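The fragility of the fixed RGB thresholds can be illustrated with a minimal Python sketch. The thresholds come from the text; the function name `is_red_rgb` and the two sample colors are hypothetical values chosen only for illustration, not measurements from the original experiments.

```python
def is_red_rgb(r, g, b, r_min=0.7, gb_max=0.2):
    """Naive RGB test from the text: R > 0.7 and G, B < 0.2 (channels in [0, 1])."""
    return r > r_min and g < gb_max and b < gb_max


# Hypothetical samples of the same red paint under different lighting.
sunny = (0.85, 0.10, 0.12)
cloudy = (0.55, 0.12, 0.14)  # darker scene: the red channel drops below 0.7

print(is_red_rgb(*sunny))   # passes the threshold
print(is_red_rgb(*cloudy))  # the same paint is rejected
```

The second sample fails only because the scene is darker, which is the kind of lighting dependence that motivates the switch to another color model.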

Therefore, we converted the RGB model to the HSV/B color model, in which the color coordinates are: hue (Hue), saturation (Saturation) and brightness (Value / Brightness).

The HSV/B model is usually represented by a color cylinder (Fig. 2). It is convenient because hue in this model is largely invariant to different kinds of lighting and shadow. This simplifies the task of highlighting the required color in the image regardless of conditions such as time of day, weather, shadows or the position of the sun.

Shader code for transition from RGB to HSV/B:

    varying highp vec2 textureCoordinate;
    precision highp float;
    uniform sampler2D Source;

    void main()
    {
        vec4 RGB = texture2D(Source, textureCoordinate);
        vec3 HSV = vec3(0);
        float M = min(RGB.r, min(RGB.g, RGB.b));
        HSV.z = max(RGB.r, max(RGB.g, RGB.b));   // V: largest channel
        float C = HSV.z - M;                     // chroma
        if (C != 0.0)
        {
            HSV.y = C / HSV.z;                   // S
            vec3 D = vec3((((HSV.z - RGB) / 6.0) + (C / 2.0)) / C);
            if (RGB.r == HSV.z)
                HSV.x = D.b - D.g;
            else if (RGB.g == HSV.z)
                HSV.x = (1.0/3.0) + D.r - D.b;
            else if (RGB.b == HSV.z)
                HSV.x = (2.0/3.0) + D.g - D.r;
            // wrap hue into [0, 1]
            if (HSV.x < 0.0) { HSV.x += 1.0; }
            if (HSV.x > 1.0) { HSV.x -= 1.0; }
        }
        gl_FragColor = vec4(HSV, 1);
    }

Fig. 2. Color cylinder HSV/B.
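The benefit of the HSV/B representation can be checked on the CPU with Python's standard `colorsys` module. This is only an illustrative sketch: the two colors are hypothetical, and dimming is modelled as a uniform scaling of all three channels, which is a simplification of real lighting changes.

```python
import colorsys

# The same hypothetical colour at full and at half brightness.
bright = (0.8, 0.2, 0.1)
dim = tuple(0.5 * c for c in bright)

h1, s1, v1 = colorsys.rgb_to_hsv(*bright)
h2, s2, v2 = colorsys.rgb_to_hsv(*dim)

# Hue and saturation stay (almost exactly) the same; only V changes.
print(f"bright: H={h1:.4f} S={s1:.4f} V={v1:.2f}")
print(f"dim:    H={h2:.4f} S={s2:.4f} V={v2:.2f}")
```

Under this simplified model, a threshold on hue and saturation keeps working when the scene gets darker, while the fixed RGB thresholds do not.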

To highlight red, we construct three intersecting planes that bound the region of the HSV/B color cylinder corresponding to red. Highlighting white is simpler, because white is located in the central part of the cylinder: we only need to specify thresholds along the radius (S axis) and height (V axis) of the cylinder that bound the region corresponding to white.

The shader code that performs this operation is:

    varying highp vec2 textureCoordinate;
    precision highp float;
    uniform sampler2D Source;

    // parameters that define the planes
    const float v12_1 = 0.7500;
    const float s21_1 = 0.2800;
    const float sv_1 = -0.3700;
    const float v12_2 = 0.1400;
    const float s21_2 = 0.6000;
    const float sv_2 = -0.2060;
    const float v12_w1 = -0.6;
    const float s21_w1 = 0.07;
    const float sv_w1 = 0.0260;
    const float v12_w2 = -0.3;
    const float s21_w2 = 0.0900;
    const float sv_w2 = -0.0090;

    void main()
    {
        vec4 valueHSV = texture2D(Source, textureCoordinate);
        float H = valueHSV.r;
        float S = valueHSV.g;
        float V = valueHSV.b;
        bool fR = (((H >= 0.75 && -0.81*H - 0.225*S + 0.8325 <= 0.0) ||
                    (H <= 0.045 && -0.81*H + 0.045*V - 0.0045 >= 0.0)) &&
                   (v12_1*S + s21_1*V + sv_1 >= 0.0 && v12_2*S + s21_2*V + sv_2 >= 0.0));
        float R = float(fR);
        float B = float(!fR && v12_w1*S + s21_w1*V + sv_w1 >= 0.0 &&
                        v12_w2*S + s21_w2*V + sv_w2 >= 0.0);
        gl_FragColor = vec4(R, 0.0, B, 1.0);
    }

The result of the shader that highlights red and white in the 512 x 512 image is shown in Fig. 3. However, computational experiments showed that for further processing it is useful to reduce the image resolution to 256 x 256, because this improves performance and has virtually no effect on the quality of sign localization.

Fig. 3. Red and white image.
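For experimenting with the thresholds outside the GPU, the red and white tests of the shader can be ported to plain Python. The sketch below copies the plane coefficients from the shader above; the function name `classify_hsv` and the string labels are hypothetical, and H, S and V are assumed to lie in [0, 1] as in the shader.

```python
def classify_hsv(h, s, v):
    """Classify one HSV pixel as 'red', 'white' or 'other' (H, S, V in [0, 1])."""
    # Hue must fall into one of the two red ranges at either end of the hue axis.
    in_red_hue = ((h >= 0.75 and -0.81 * h - 0.225 * s + 0.8325 <= 0.0) or
                  (h <= 0.045 and -0.81 * h + 0.045 * v - 0.0045 >= 0.0))
    # Two planes in (S, V) cut off pixels that are too pale or too dark.
    vivid = (0.75 * s + 0.28 * v - 0.37 >= 0.0 and
             0.14 * s + 0.60 * v - 0.206 >= 0.0)
    if in_red_hue and vivid:
        return "red"
    # White: low saturation and sufficient brightness.
    if (-0.6 * s + 0.07 * v + 0.026 >= 0.0 and
            -0.3 * s + 0.09 * v - 0.009 >= 0.0):
        return "white"
    return "other"


for name, hsv in [("pure red", (0.0, 1.0, 1.0)),
                  ("white", (0.0, 0.0, 1.0)),
                  ("black", (0.0, 0.0, 0.0)),
                  ("green", (1.0 / 3.0, 1.0, 1.0))]:
    print(name, "->", classify_hsv(*hsv))
```

Only the red and white classes are used later; everything else is discarded, as in the shader output where the green channel is always zero.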

Finding circles in an image

Most circle detection methods work with binary images, so the red-and-white image obtained in the previous step must be converted to binary form. We relied on the fact that on prohibitory signs the white background borders the red outline of the sign, and developed a shader algorithm that looks for such borders in the red-and-white image, marking border pixels as 1 and all other pixels as 0.

The algorithm works as follows:

- the adjacent pixels of each red pixel in the image are scanned;

- if there is at least one white pixel, then the original red pixel is marked as the boundary pixel.

Thus, we get a black and white image (256 x 256), in which the background is black and the candidate circle outlines are white (Fig. 4a).
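The two steps of the border search can be sketched in Python as follows. This is an illustration rather than the original shader: the text does not say which pixels count as adjacent, so the sketch assumes the 8-neighbourhood, and the character encoding of the input (`R` red, `W` white, `.` other) is a hypothetical convenience.

```python
def red_white_border(labels):
    """Mark red pixels that touch at least one white pixel.

    labels: list of equal-length strings, 'R' = red, 'W' = white, '.' = other.
    Returns a grid of 0/1 values of the same size.
    """
    height, width = len(labels), len(labels[0])
    border = [[0] * width for _ in range(height)]
    for y in range(height):
        for x in range(width):
            if labels[y][x] != "R":
                continue
            # Scan the 8 neighbours (assumption) of the red pixel.
            for dy in (-1, 0, 1):
                for dx in (-1, 0, 1):
                    ny, nx = y + dy, x + dx
                    if ((dy or dx) and 0 <= ny < height and 0 <= nx < width
                            and labels[ny][nx] == "W"):
                        border[y][x] = 1
    return border


patch = [
    "R....",   # isolated red pixel: no white neighbour, so it is dropped
    "..RRR",
    "..RWR",   # red ring around a white pixel: every ring pixel is kept
    "..RRR",
]
for row in red_white_border(patch):
    print(row)
```

Red regions that do not touch white, such as tail lights or red cars, produce no border pixels under this rule.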

Fig. 4a. A binary image showing the boundaries between red and white.

To reduce the number of false points, it is useful to apply morphological operations (Fig. 4b).

Fig. 4b. The same image after applying morphological operations.

Next, circles must be found in the resulting binary image. First, we tried the Hough circle transform as implemented on the CPU in the OpenCV library. Unfortunately, computational experiments showed that this method overloads the CPU and reduces performance to an unacceptable level.

A logical way out would be to move the algorithm to GPU shaders. However, like other methods for finding circles in images, the Hough method fits poorly into the shader paradigm. We therefore turned to a more exotic circle detection method, Fast Circle Detection Using Gradient Pair Vectors [1], which shows higher performance on the CPU.

The main steps of this method are as follows:

1. For each pixel of a binary image, a vector is determined that characterizes the direction of the brightness gradient at a given point. These calculations are performed by a shader that implements the Sobel operator:

    varying highp vec2 textureCoordinate;
    precision highp float;
    uniform sampler2D Source;
    uniform float Offset;   // distance to a neighbouring texel

    void main()
    {
        vec4 center = texture2D(Source, textureCoordinate);
        vec4 NE = texture2D(Source, textureCoordinate + vec2(Offset, Offset));
        vec4 SW = texture2D(Source, textureCoordinate + vec2(-Offset, -Offset));
        vec4 NW = texture2D(Source, textureCoordinate + vec2(-Offset, Offset));
        vec4 SE = texture2D(Source, textureCoordinate + vec2(Offset, -Offset));
        vec4 S = texture2D(Source, textureCoordinate + vec2(0, -Offset));
        vec4 N = texture2D(Source, textureCoordinate + vec2(0, Offset));
        vec4 E = texture2D(Source, textureCoordinate + vec2(Offset, 0));
        vec4 W = texture2D(Source, textureCoordinate + vec2(-Offset, 0));
        vec2 gradient;
        gradient.x = NE.r + 2.0*E.r + SE.r - NW.r - 2.0*W.r - SW.r;
        gradient.y = SW.r + 2.0*S.r + SE.r - NW.r - 2.0*N.r - NE.r;
        float gradMagnitude = length(gradient);
        // shift components from [-4, 4] to non-negative values before packing
        float gradX = (gradient.x + 4.0) / 255.0;
        float gradY = (gradient.y + 4.0) / 255.0;
        gl_FragColor = vec4(gradMagnitude, gradX, gradY, 1.0);
    }

All non-zero vectors are then grouped by direction. Because the binary image is discrete, only 48 distinct directions are possible, i.e. there are 48 groups.
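The figure of 48 directions can be reproduced with a short Python sketch. On a 0/1 image the Sobel weights used in the shader give gradient components that are integers between -4 and 4, so each gradient can be reduced to its simplest integer direction. The helper names `sobel` and `reduced_direction` are hypothetical, and treating pixels outside the image as 0 is an assumption of the sketch.

```python
from math import gcd


def sobel(img, x, y):
    """Sobel gradient (gx, gy) at column x, row y of a 0/1 image.

    Uses the same weights and sign convention as the shader; pixels outside
    the image are treated as 0 (assumption).
    """
    def p(dx, dy):
        nx, ny = x + dx, y + dy
        inside = 0 <= ny < len(img) and 0 <= nx < len(img[0])
        return img[ny][nx] if inside else 0

    gx = p(1, 1) + 2 * p(1, 0) + p(1, -1) - p(-1, 1) - 2 * p(-1, 0) - p(-1, -1)
    gy = p(-1, -1) + 2 * p(0, -1) + p(1, -1) - p(-1, 1) - 2 * p(0, 1) - p(1, 1)
    return gx, gy


def reduced_direction(gx, gy):
    """Divide a non-zero integer vector by its gcd, e.g. (2, -4) -> (1, -2)."""
    g = gcd(abs(gx), abs(gy))
    return gx // g, gy // g


# Every non-zero gradient with components in [-4, 4] falls into one of these groups.
directions = {reduced_direction(gx, gy)
              for gx in range(-4, 5) for gy in range(-4, 5)
              if (gx, gy) != (0, 0)}
print(len(directions))

img = [[0, 0, 0],
       [0, 1, 0],
       [0, 0, 0]]
print(sobel(img, 0, 1), sobel(img, 0, 0))
```

Grouping by reduced direction makes the search for opposite vectors in the next step a simple lookup: the partner of group (a, b) is group (-a, -b).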

2. Within the groups, we search for pairs of oppositely directed vectors V1 and V2, for example 45 and 225 degrees. For each pair found, the following conditions are checked (Fig. 5):

- the beta angle is less than a certain threshold

- the distance between points P1 and P2 is less than the specified maximum diameter of the circle and greater than the minimum.

If these conditions are met, then it is considered that point C, which is the middle of the segment P1P2, is the intended center of the circle. Next, this point C is placed in the so-called accumulator.

3. The accumulator is a 3D array of size 256 x 256 x 80. The first two dimensions (256 x 256 - the height and width of the binary image) correspond to the expected centers of the circles, and the third dimension (80) represents the possible radii of the circles (maximum - 80 pixels). Thus, each gradient pair accumulates a response at a certain point corresponding to the assumed center of a circle with a certain radius.

Fig. 5. A pair of vectors V1-V2 and the estimated center of the circle C.

4. Next, the accumulator searches for centers in which at least 4 pairs of vectors with different directions responded, for example, pairs 0 and 180, 45 and 225, 90 and 270, 135 and 315. Centers close to each other are combined. If several centers of circles with different radii are found at one point of the accumulator, then these centers are also combined and the maximum radius is taken.

The result of the circle search algorithm is shown in Fig. 6.

Fig. 6. Localized circles corresponding to two prohibition signs.
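Steps 2 to 4 can be sketched in Python as shown below. This is a simplified illustration under several assumptions, not the implementation from [1] or from the original project: the angle beta is taken to be the angle between V1 and the line P1P2, the accumulator is a dictionary instead of a 256 x 256 x 80 array, the merging of nearby centers is omitted, and the names `find_circles`, `d_min`, `d_max` and `beta_max_deg` are hypothetical.

```python
from collections import defaultdict
from itertools import combinations
from math import acos, degrees, gcd, hypot


def reduce_dir(gx, gy):
    """Simplest integer direction of a non-zero integer gradient."""
    g = gcd(abs(gx), abs(gy))
    return gx // g, gy // g


def find_circles(points, d_min=8, d_max=160, beta_max_deg=10.0, min_pairs=4):
    """points: list of (x, y, gx, gy) edge pixels with non-zero integer gradients.

    Returns sorted (cx, cy, r) cells that received votes from at least
    min_pairs pairs of opposite gradients with different directions.
    """
    votes = defaultdict(set)  # (cx, cy, r) -> directions of the pairs that voted
    for (x1, y1, gx1, gy1), (x2, y2, gx2, gy2) in combinations(points, 2):
        d1, d2 = reduce_dir(gx1, gy1), reduce_dir(gx2, gy2)
        if d2 != (-d1[0], -d1[1]):
            continue  # gradients are not opposite
        dist = hypot(x1 - x2, y1 - y2)
        if not d_min < dist < d_max:
            continue  # diameter outside the allowed range
        # beta: angle between V1 and the line P1P2 (assumed definition)
        cos_beta = abs(gx1 * (x1 - x2) + gy1 * (y1 - y2)) / (hypot(gx1, gy1) * dist)
        if degrees(acos(min(1.0, cos_beta))) >= beta_max_deg:
            continue
        # The midpoint of P1P2 is the candidate center, half the distance the radius.
        cell = (round((x1 + x2) / 2), round((y1 + y2) / 2), round(dist / 2))
        votes[cell].add(min(d1, d2))
    return sorted(cell for cell, dirs in votes.items() if len(dirs) >= min_pairs)


# Synthetic test data: 8 edge points on a circle of radius 10 around (20, 20).
ring = []
for gx, gy in [(1, 0), (1, 1), (0, 1), (-1, 1), (-1, 0), (-1, -1), (0, -1), (1, -1)]:
    n = hypot(gx, gy)
    ring.append((20 + 10 * gx / n, 20 + 10 * gy / n, gx, gy))
# Two stray points with opposite gradients that do not face each other.
noise = [(5, 5, 1, 0), (5, 25, -1, 0)]

print(find_circles(ring + noise))
```

The default `d_max=160` corresponds to the maximum radius of 80 pixels mentioned in step 3. Comparing all pairs is quadratic in the number of points; grouping the points by direction first, as the text describes, limits the comparisons to opposite groups.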

Maxim Sachkov

“I would prefer the Opel Eye. The Bavarian's electronic assistant resembles an overly caring grandmother carefully protecting her beloved grandson from every kind of misfortune, while its compatriot from Rüsselsheim resembles a young father who gives the child enough freedom and steps in only in dangerous situations. I prefer it when a person relies on himself and does not constantly expect help from others, although such people also need practical advice in difficult moments.”

Building a neural network

We will use the Keras library to create the neural network. To classify images into the appropriate categories, we will build a CNN (convolutional neural network) model. CNNs are well suited to image classification.

The architecture of our model:

- 2 Conv2D layers (filters=32, kernel_size=(5,5), activation="relu")

- MaxPool2D layer (pool_size=(2,2))

- Dropout layer (rate=0.25)

- 2 Conv2D layers (filters=64, kernel_size=(3,3), activation="relu")

- MaxPool2D layer (pool_size=(2,2))

- Dropout layer (rate=0.25)

- Flatten layer to flatten the feature maps into 1 dimension

- Dense layer (500, activation="relu")

- Dropout layer (rate=0.5)

- Dense layer (43, activation="softmax")

    from keras import backend as K
    from keras.layers import Conv2D, Dense, Dropout, Flatten, MaxPool2D
    from keras.models import Sequential


    class Net:
        @staticmethod
        def build(width, height, depth, classes):
            # Default to channels-last ordering: (height, width, depth).
            inputShape = (height, width, depth)
            if K.image_data_format() == 'channels_first':
                inputShape = (depth, height, width)

            model = Sequential()
            # First convolutional block: 2 x Conv(32, 5x5) -> pool -> dropout.
            model.add(Conv2D(filters=32, kernel_size=(5, 5), activation='relu',
                             input_shape=inputShape))
            model.add(Conv2D(filters=32, kernel_size=(5, 5), activation='relu'))
            model.add(MaxPool2D(pool_size=(2, 2)))
            model.add(Dropout(rate=0.25))
            # Second convolutional block: 2 x Conv(64, 3x3) -> pool -> dropout.
            model.add(Conv2D(filters=64, kernel_size=(3, 3), activation='relu'))
            model.add(Conv2D(filters=64, kernel_size=(3, 3), activation='relu'))
            model.add(MaxPool2D(pool_size=(2, 2)))
            model.add(Dropout(rate=0.25))
            # Classifier head.
            model.add(Flatten())
            model.add(Dense(500, activation='relu'))
            model.add(Dropout(rate=0.5))
            model.add(Dense(classes, activation='softmax'))
            return model
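To see how the listed layers shrink the image, the feature-map sizes and parameter counts can be traced by hand. The pure-Python sketch below assumes the Keras defaults for these layers ("valid" padding, stride 1 for convolutions, pooling stride equal to the pool size). The 30 x 30 x 3 input used in the example is an assumption, since the text does not state the input size, and the function name `trace_architecture` is hypothetical.

```python
def trace_architecture(height, width, depth, classes):
    """Return (flattened feature size, trainable parameter count) for the model."""
    h, w, c = height, width, depth
    params = 0
    for block in ([(32, 5), (32, 5)], [(64, 3), (64, 3)]):
        for filters, k in block:
            params += (k * k * c + 1) * filters  # kernel weights plus one bias per filter
            h, w, c = h - k + 1, w - k + 1, filters  # 'valid' convolution
        h, w = h // 2, w // 2  # 2x2 max pooling
    flat = h * w * c
    params += (flat + 1) * 500  # Dense(500)
    params += (500 + 1) * classes  # Dense(classes)
    return flat, params


flat, params = trace_architecture(30, 30, 3, 43)
print(flat, params)
```

With this input the spatial size goes 30, 26, 22, 11, 9, 7, 3, so the Flatten layer outputs 3 x 3 x 64 values, and most of the parameters sit in the first Dense layer rather than in the convolutions.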

Flaws

OMR also has some disadvantages and limitations. If the user wants to collect large amounts of text, OMR complicates data collection (Green, 2000). Data may be missed during scanning, and misaligned or unnumbered pages may be scanned in the wrong order. If safeguards are not in place, a page may be rescanned, resulting in duplicate and corrupted data (Bergeron, 1998).

OMR provides fast and accurate data collection and output; however, it does not meet everyone's needs.

Because OMR is widespread and easy to use, standardized exams consist predominantly of multiple-choice questions, which changes the nature of what is tested.

Source: https://www.aclweb.org

Areas of use

OMR forms offer various field types to provide the format the client requires. These include:

- multiple choice, where there are several options but only one is to be chosen: ABCDE, 12345, completely disagree, disagree, abstain, agree, completely agree, etc.

- grid, where the marking spaces or lines are arranged in a grid so that the user can fill in a phone number, name, ID and so on

- add, where the answers are totaled into a single value

- boolean, answering "yes" or "no" to every question

- binary, answering “yes” or “no” to only one